October 11, 2023

In this recent article, Hoogland et al. proposed combining flexible parametric survival modeling with regularization to improve risk prediction models for time-to-event data, and they made a unified regularization approach for log hazard and log cumulative hazard models available in an R package, regsurv. For the log cumulative hazard approach, they built on Royston and Parmar's (2002) use of restricted cubic splines of the natural log of time, constructed from truncated power bases. They also showed that time-varying effects can be accommodated with the spline basis functions, although this has been shown before. The regularization methods they included were the elastic net, ridge, and group lasso. They used k-fold cross-validation over a grid of candidate tuning parameters to choose the optimal one, although they acknowledged that this is computationally intensive.
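For readers less familiar with the Royston-Parmar basis, the following is a minimal Python sketch of a restricted cubic spline basis in log time built from truncated power functions, using the standard Royston and Parmar (2002) construction, together with elastic net and group lasso penalty functions. It is not code from regsurv: the function names, knots, and coefficients are hypothetical, and the penalty parameterization (λ for overall strength, α mixing lasso and ridge, groups weighted by the square root of their size) is the common glmnet-style convention, which may differ from the package's.

```python
import numpy as np


def _pos_cube(z):
    """Truncated power function (z)_+^3."""
    return np.clip(z, 0.0, None) ** 3


def rcs_basis(log_t, knots):
    """Restricted cubic spline basis in log time (Royston-Parmar construction).

    knots: sorted values [k_min, k_1, ..., k_m, k_max] on the log-time scale.
    Returns columns [x, v_1(x), ..., v_m(x)]; the intercept is left to the model.
    v_j(x) = (x - k_j)_+^3 - lam_j (x - k_min)_+^3 - (1 - lam_j)(x - k_max)_+^3,
    with lam_j = (k_max - k_j) / (k_max - k_min), so the basis is linear
    below k_min and above k_max.
    """
    x = np.asarray(log_t, dtype=float)
    k = np.asarray(knots, dtype=float)
    k_min, k_max = k[0], k[-1]
    cols = [x]
    for kj in k[1:-1]:
        lam = (k_max - kj) / (k_max - k_min)
        cols.append(_pos_cube(x - kj) - lam * _pos_cube(x - k_min)
                    - (1.0 - lam) * _pos_cube(x - k_max))
    return np.column_stack(cols)


def elastic_net_penalty(beta, lam, alpha):
    """lam * (alpha * ||beta||_1 + (1 - alpha) / 2 * ||beta||_2^2); alpha = 0 gives ridge."""
    beta = np.asarray(beta, dtype=float)
    return lam * (alpha * np.abs(beta).sum() + 0.5 * (1.0 - alpha) * np.sum(beta ** 2))


def group_lasso_penalty(beta, groups, lam):
    """lam * sum_g sqrt(p_g) * ||beta_g||_2, where p_g is the number of coefficients in group g."""
    beta = np.asarray(beta, dtype=float)
    groups = np.asarray(groups)
    total = 0.0
    for g in np.unique(groups):
        b = beta[groups == g]
        total += np.sqrt(b.size) * np.linalg.norm(b)
    return lam * total


# Example: log cumulative hazard eta = gamma0 + B @ gamma, survival S(t) = exp(-exp(eta)).
knots = np.log([0.5, 1.0, 2.0, 5.0])                # hypothetical boundary and interior knots
t = np.array([0.25, 1.0, 3.0, 8.0])
B = rcs_basis(np.log(t), knots)
gamma0, gamma = -1.0, np.array([1.2, 0.05, -0.02])  # hypothetical coefficients
surv = np.exp(-np.exp(gamma0 + B @ gamma))
```

One plausible reason the group lasso fits this setting: if the spline interaction terms that make up a covariate's time-varying effect are placed in one group, the penalty keeps or drops that whole effect together rather than term by term.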

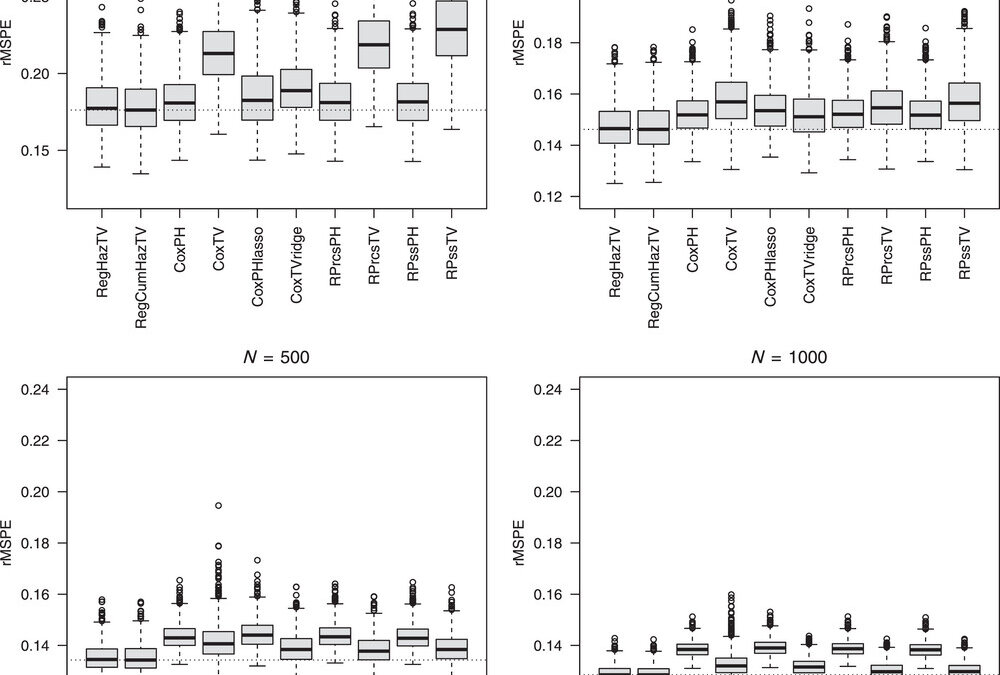

To test their methods, they used a two-component Weibull mixture model to generate simulated survival data. They set this up to have a non-monotone hazard, but I question why they did not simply sample from a survival distribution that already has a non-monotone hazard. In any case, they then compared many variants of survival models with and without regularization, ranging from Cox models to log cumulative hazard models and Royston-Parmar proportional hazards models. They measured prediction accuracy with the root mean squared prediction error, i.e., the root mean squared difference between predicted and true survival probabilities. They also adapted Harrell's c-statistic to their predicted probabilities to assess discriminative ability. Although others have previously adapted this statistic for survival data, the authors did not seem to have searched the literature for those earlier references. Finally, they assessed calibration graphically.
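The simulation design and performance measures described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: event times are drawn by numerically inverting S(t | x) = S_0(t)^exp(η), where S_0(t) = p exp(-λ1 t^γ1) + (1 - p) exp(-λ2 t^γ2) is a two-component Weibull mixture. The proportional-hazards covariate effect, the parameter values (chosen so one component has a decreasing Weibull hazard, γ1 < 1, and the other an increasing one, γ2 > 1), the censoring time, and the prediction horizon are all hypothetical. The RMSPE is computed at a single horizon, and the c-statistic is the standard Harrell form with predicted survival at that horizon as the score; the authors' exact definitions may differ.

```python
import numpy as np


def mixture_weibull_surv(t, p, lam1, gam1, lam2, gam2):
    """S_0(t) = p*exp(-lam1*t**gam1) + (1-p)*exp(-lam2*t**gam2)."""
    t = np.asarray(t, dtype=float)
    return p * np.exp(-lam1 * t ** gam1) + (1.0 - p) * np.exp(-lam2 * t ** gam2)


def simulate_times(eta, params, rng, n_iter=100):
    """Draw T with S(T | x) = U, U ~ Uniform(0, 1), where S(t | x) = S_0(t) ** exp(eta).

    Uses vectorized bisection on log(t) over [-20, 20], assuming S_0 is close to 1
    at exp(-20) and close to 0 at exp(20) for the chosen parameters.
    """
    eta = np.asarray(eta, dtype=float)
    u = rng.uniform(size=eta.shape)
    lo = np.full(eta.shape, -20.0)
    hi = np.full(eta.shape, 20.0)
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        s_mid = mixture_weibull_surv(np.exp(mid), *params) ** np.exp(eta)
        right = s_mid > u  # S decreases in t, so the solution lies above mid
        lo = np.where(right, mid, lo)
        hi = np.where(right, hi, mid)
    return np.exp(0.5 * (lo + hi))


def rmspe(s_pred, s_true):
    """Root mean squared difference between predicted and true survival probabilities."""
    diff = np.asarray(s_pred, dtype=float) - np.asarray(s_true, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def harrell_c(time, event, s_pred):
    """Harrell-type concordance with predicted survival at a fixed horizon as the score.

    A pair (i, j) is usable when time[i] < time[j] and subject i had the event; it is
    concordant when subject i (who failed first) has the lower predicted survival.
    Ties in predicted survival count one half.
    """
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    s = np.asarray(s_pred, dtype=float)
    concordant, usable = 0.0, 0
    for i in np.flatnonzero(event):
        later = time > time[i]
        usable += int(later.sum())
        concordant += np.sum(s[i] < s[later]) + 0.5 * np.sum(s[i] == s[later])
    return concordant / usable


# Usage with hypothetical settings (not the paper's values).
rng = np.random.default_rng(2023)
params = (0.6, 1.0, 0.6, 0.05, 3.0)          # p, lam1, gam1, lam2, gam2
x = rng.normal(size=300)
eta = 0.7 * x                                 # hypothetical log hazard ratio of 0.7
t_event = simulate_times(eta, params, rng)
c_time = 5.0                                  # administrative censoring time
time = np.minimum(t_event, c_time)
event = t_event <= c_time
horizon = 2.0
s_true = mixture_weibull_surv(horizon, *params) ** np.exp(eta)
s_naive = np.full_like(s_true, s_true.mean())  # covariate-free "prediction"
print(rmspe(s_naive, s_true), harrell_c(time, event, s_true))
```

In a real comparison, s_naive would be replaced by each fitted model's predicted survival at the horizon, so that RMSPE and concordance can be compared across the regularized and unregularized models.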

In their simulations, the regularized log hazard and log cumulative hazard models with time-varying effects performed best. They found similar results in a real-data application. Their R package was developed for fully parametric models, and they acknowledge that this comes with the risk of misspecification inherent to any fully parametric model. Their package is also more computationally demanding than glmnet and penalized, both of which fit penalized Cox models. In their discussion, they acknowledge that sample size plays an important role and could limit the use of their method. Nevertheless, their methods can be useful when time-varying effects are present, since regularization helps reduce the variability of the prediction estimates.

Written by,

Usha Govindarajulu, PhD

Keywords: survival, regularization, lasso, ridge, elastic net, log hazard, time-varying, R software

References

Hoogland J, Debray TPA, Crowther MJ, Riley RD, IntHout J, Reitsma JB, Zwinderman AH (2023). “Regularized parametric survival modeling to improve risk prediction models.” Biometrical Journal.

https://doi.org/10.1002/bimj.202200319

https://onlinelibrary.wiley.com/cms/asset/1349e2cc-038f-4649-a0ba-5653d1c0a120/bimj2529-fig-0001-m.jpg